Api Gateway Upload File to S3 and Run Lambda

So you're building a REST API and you need to add support for uploading files from a web or mobile app. You also need to add a reference to these uploaded files against entities in your database, along with metadata supplied by the client.

In this article, I'll show you how to do this using AWS API Gateway, Lambda and S3. We'll use the example of an event management web app where attendees can log in and upload photos associated with a specific event, along with a title and description. We will use S3 to store the photos and an API Gateway API to handle the upload request. The requirements are:

- User can log in to the app and view a list of photos for a specific event, along with each photo's metadata (date, title, description, etc).

- User can only upload photos for the event if they are registered as having attended that event.

- Use Infrastructure-as-Code for all cloud resources to make it easy to roll this out to multiple environments. (No using the AWS Console for mutable operations here 🚫🤠)

Considering implementation options

Having built similar functionality in the past using non-serverless technologies (e.g. in Express.js), my initial approach was to investigate how to use a Lambda-backed API Gateway endpoint that would handle everything: authentication, authorization, file upload and finally writing the S3 location and metadata to the database. While this approach is valid and achievable, it does have a few limitations:

- You need to write code inside your Lambda to manage the multipart file upload and the edge cases around this, whereas the existing S3 SDKs are already optimized for this.

- Lambda pricing is duration-based, so for larger files your function will take longer to complete, costing you more.

- API Gateway has a payload size hard limit of 10MB. Contrast that with the 5GB limit for a single S3 PUT upload.
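To put numbers on the last point, here's a small TypeScript sketch that reports which of the two routes could carry a file of a given size. The constants use binary units (10 * 1024 * 1024 and 5 * 1024 * 1024 * 1024 bytes) purely as an assumption for illustration, and `routesThatFit` is a hypothetical helper, not part of any AWS SDK.

```typescript
// Limits quoted in the text, expressed in bytes (binary units are an assumption).
const API_GATEWAY_MAX_PAYLOAD_BYTES = 10 * 1024 * 1024; // ~10MB
const S3_MAX_SINGLE_PUT_BYTES = 5 * 1024 * 1024 * 1024; // ~5GB

// Hypothetical helper: which upload routes could accept a file of this size?
export function routesThatFit(sizeBytes: number): string[] {
  const routes: string[] = [];
  if (sizeBytes <= API_GATEWAY_MAX_PAYLOAD_BYTES) {
    routes.push('api-gateway-proxy'); // file proxied through a Lambda-backed endpoint
  }
  if (sizeBytes <= S3_MAX_SINGLE_PUT_BYTES) {
    routes.push('s3-presigned-put'); // file PUT straight to S3
  }
  return routes;
}

console.log(routesThatFit(50 * 1024 * 1024)); // a 50MB video: logs [ 's3-presigned-put' ]
```

Anything above the API Gateway limit can only take the direct-to-S3 route, which is what the rest of this article builds.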

Using S3 presigned URLs for upload

After further research, I found a better solution: uploading objects to S3 using presigned URLs, as a means of both providing a pre-upload authorization check and pre-tagging the uploaded photo with structured metadata.

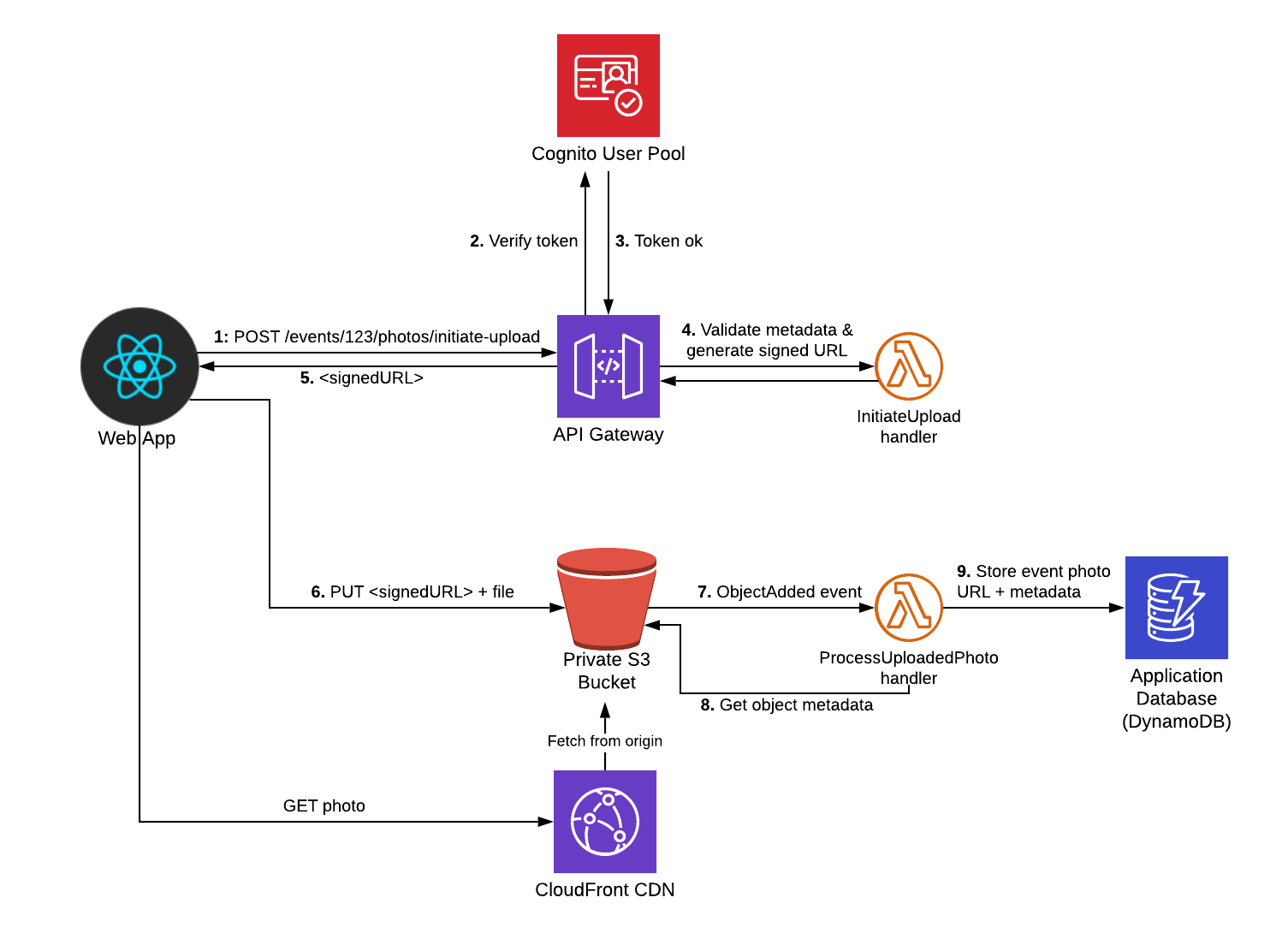

The diagram above shows the request flow from a web app.

The main thing to notice is that from the web client's point of view, it's a 2-step process:

- Initiate the upload request, sending metadata related to the photo (e.g. eventId, title, description, etc). The API then does an auth check, executes business logic (e.g. restricting access only to users who have attended the event) and finally generates and responds with a secure presigned URL.

- Upload the file itself using the presigned URL.

I'm using Cognito as my user store here, but you could easily swap this out for a custom Lambda Authorizer if your API uses a different auth mechanism.

Let's dive in…
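If you do swap Cognito for a custom Lambda Authorizer, a TOKEN authorizer receives the `Authorization` header value plus the ARN of the method being invoked, and returns an IAM policy that allows or denies `execute-api:Invoke`. The sketch below assumes a hypothetical `verifyToken` function standing in for your own token validation, and declares minimal event and result types inline rather than importing them from `@types/aws-lambda`.

```typescript
// Minimal stand-in for the TOKEN authorizer event (only the fields used here).
interface TokenAuthorizerEvent {
  type: 'TOKEN';
  authorizationToken: string;
  methodArn: string;
}

// Shape API Gateway expects back from a Lambda authorizer.
interface AuthorizerResult {
  principalId: string;
  policyDocument: {
    Version: '2012-10-17';
    Statement: { Action: string; Effect: 'Allow' | 'Deny'; Resource: string }[];
  };
}

// Hypothetical verifier: replace with real JWT or session validation.
type VerifyToken = (token: string) => Promise<{ userId: string } | null>;

export function makeAuthorizer(verifyToken: VerifyToken) {
  return async (event: TokenAuthorizerEvent): Promise<AuthorizerResult> => {
    const user = await verifyToken(event.authorizationToken);
    return {
      principalId: user ? user.userId : 'anonymous',
      policyDocument: {
        Version: '2012-10-17',
        Statement: [
          {
            Action: 'execute-api:Invoke',
            // Allow only when the token was recognized; otherwise deny this method.
            Effect: user ? 'Allow' : 'Deny',
            Resource: event.methodArn,
          },
        ],
      },
    };
  };
}
```

The business-logic check (has this user attended the event?) still belongs in the endpoint handler; the authorizer only establishes who the caller is.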

Step 1: Create the S3 bucket

I use the Serverless Framework to manage configuration and deployment of all my cloud resources. For this app, I use 2 separate "services" (or stacks) that can be independently deployed:

- `infra` service: this contains the S3 bucket, CloudFront distribution, DynamoDB table and Cognito User Pool resources.

- `photos-api` service: this contains the API Gateway and Lambda functions.

You can view the full configuration of each stack in the GitHub repo, but we'll cover the key points below.

The S3 bucket is defined as follows:

```yaml
resources:
  Resources:
    PhotosBucket:
      Type: AWS::S3::Bucket
      Properties:
        BucketName: !Sub '${self:custom.photosBucketName}'
        AccessControl: Private
        CorsConfiguration:
          CorsRules:
            - AllowedHeaders: ['*']
              AllowedMethods: ['PUT']
              AllowedOrigins: ['*']
```

The CORS configuration is important here, as without it your web client won't be able to perform the PUT request after acquiring the signed URL. I'm also using CloudFront as the CDN in order to minimize latency for users downloading the photos. You can view the config for the CloudFront distribution here. However, this is an optional component, and if you'd rather clients read photos directly from S3, you can change the AccessControl property above to be PublicRead.
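A browser PUT to another origin always triggers a CORS preflight, and the bucket only answers it favourably if some rule matches the origin, method and requested headers. The deliberately simplified TypeScript model below shows why `AllowedMethods: ['PUT']` is the part that matters here. It is an illustration only: S3's real matching has more cases (such as a single `*` wildcard inside an origin), and `CorsRule` and `corsAllows` are hypothetical names.

```typescript
interface CorsRule {
  AllowedHeaders: string[];
  AllowedMethods: string[];
  AllowedOrigins: string[];
}

interface Preflight {
  origin: string;
  method: string;
  requestHeaders: string[];
}

// Simplified matching: '*' matches anything, otherwise require an exact match
// (case-insensitive when comparing header names).
function matches(patterns: string[], value: string, caseInsensitive = false): boolean {
  return patterns.some(
    (p) => p === '*' || (caseInsensitive ? p.toLowerCase() === value.toLowerCase() : p === value),
  );
}

export function corsAllows(rules: CorsRule[], req: Preflight): boolean {
  return rules.some(
    (rule) =>
      matches(rule.AllowedOrigins, req.origin) &&
      matches(rule.AllowedMethods, req.method) &&
      req.requestHeaders.every((h) => matches(rule.AllowedHeaders, h, true)),
  );
}

// The rule from the bucket definition above.
const photosBucketCors: CorsRule[] = [
  { AllowedHeaders: ['*'], AllowedMethods: ['PUT'], AllowedOrigins: ['*'] },
];

console.log(corsAllows(photosBucketCors, {
  origin: 'https://app.example.com',
  method: 'PUT',
  requestHeaders: ['content-type'],
}));
```

In production you may want to replace `'*'` in `AllowedOrigins` with your app's own origin.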

Step 2: Create "Initiate Upload" API Gateway endpoint

Our next step is to add a new API path that the client can call to request the signed URL. Requests to this will look like so:

```
POST /events/{eventId}/photos/initiate-upload

{
  "title": "Keynote Speech",
  "description": "Steve walking out on stage",
  "contentType": "image/png"
}
```

Responses will contain an object with a `photoId` and an `s3PutObjectUrl` field that the client can use to upload to S3. This URL looks like so:

https://s3.eu-west-1.amazonaws.com/eventsapp-photos-dev.sampleapps.winterwindsoftware.com/uploads/event_1234/1d80868b-b05b-4ac7-ae52-bdb2dfb9b637.png?AWSAccessKeyId=XXXXXXXXXXXXXXX&Cache-Control=max-age%3D31557600&Content-Type=image%2Fpng&Expires=1571396945&Signature=F5eRZQOgJyxSdsAS9ukeMoFGPEA%3D&x-amz-meta-contenttype=image%2Fpng&x-amz-meta-description=Steve%20walking%20out%20on%20stage&x-amz-meta-eventid=1234&x-amz-meta-photoid=1d80868b-b05b-4ac7-ae52-bdb2dfb9b637&x-amz-meta-title=Keynote%20Speech&x-amz-security-token=XXXXXXXXXX

Notice in particular these fields embedded in the query string:

- `x-amz-meta-XXX`: these fields contain the metadata values that our `initiateUpload` Lambda function will set.

- `x-amz-security-token`: this contains the temporary security token used for authenticating with S3.

- `Signature`: this ensures that the PUT request cannot be altered by the client (e.g. by changing metadata values).
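To inspect the embedded metadata yourself, you can parse a presigned URL with the standard WHATWG `URL` API available in browsers and Node.js. This is a debugging aid only: `readSignedMetadata` is a hypothetical helper, the example URL is a shortened form of the one above, and the client must not edit these values, because any change invalidates the `Signature`.

```typescript
// Collect the x-amz-meta-* query parameters from a presigned S3 URL.
export function readSignedMetadata(presignedUrl: string): Record<string, string> {
  const metadata: Record<string, string> = {};
  const prefix = 'x-amz-meta-';
  // URLSearchParams percent-decodes values, so %20 comes back as a space.
  new URL(presignedUrl).searchParams.forEach((value, key) => {
    if (key.toLowerCase().startsWith(prefix)) {
      metadata[key.slice(prefix.length).toLowerCase()] = value;
    }
  });
  return metadata;
}

const exampleUrl =
  'https://s3.eu-west-1.amazonaws.com/eventsapp-photos-dev.sampleapps.winterwindsoftware.com' +
  '/uploads/event_1234/1d80868b-b05b-4ac7-ae52-bdb2dfb9b637.png' +
  '?Content-Type=image%2Fpng&Expires=1571396945' +
  '&x-amz-meta-description=Steve%20walking%20out%20on%20stage' +
  '&x-amz-meta-eventid=1234&x-amz-meta-title=Keynote%20Speech';

console.log(readSignedMetadata(exampleUrl));
```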

The following excerpt from serverless.yml shows the function configuration:

```yaml
# serverless.yml
service: eventsapp-photos-api
…
custom:
  appName: eventsapp
  infraStack: ${self:custom.appName}-infra-${self:provider.stage}
  awsAccountId: ${cf:${self:custom.infraStack}.AWSAccountId}
  apiAuthorizer:
    arn: arn:aws:cognito-idp:${self:provider.region}:${self:custom.awsAccountId}:userpool/${cf:${self:custom.infraStack}.UserPoolId}
  corsConfig: true

functions:
  …
  httpInitiateUpload:
    handler: src/http/initiate-upload.handler
    iamRoleStatements:
      - Effect: Allow
        Action:
          - s3:PutObject
        Resource: arn:aws:s3:::${cf:${self:custom.infraStack}.PhotosBucket}*
    events:
      - http:
          path: events/{eventId}/photos/initiate-upload
          method: post
          authorizer: ${self:custom.apiAuthorizer}
          cors: ${self:custom.corsConfig}
```

A few things to note here:

- The `httpInitiateUpload` Lambda function will handle POST requests to the specified path.

- The Cognito user pool (output from the `infra` stack) is referenced in the function's `authorizer` property. This makes sure requests without a valid token in the `Authorization` HTTP header are rejected by API Gateway.

- CORS is enabled for all API endpoints.

- Finally, the `iamRoleStatements` property creates an IAM role that this function will run as. This role allows `PutObject` actions against the S3 photos bucket. It is especially important that this permission set follows the least privilege principle, as the signed URL returned to the client contains a temporary access token that allows the token holder to assume all the permissions of the IAM role that generated the signed URL.
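In that spirit, note that the `Resource` pattern above, `arn:aws:s3:::<bucket>*`, has no `/` before the wildcard, so it also matches any other bucket whose name starts with the same string. One way to tighten it is to scope the statement to the `uploads/` prefix the handler writes to; in serverless.yml that would be a literal ARN ending in `/uploads/*`. The hypothetical `putObjectStatementFor` helper below just shows how that ARN is put together.

```typescript
interface IamStatement {
  Effect: 'Allow' | 'Deny';
  Action: string[];
  Resource: string;
}

// Hypothetical helper: a PutObject statement scoped to one key prefix
// instead of a bucket-name wildcard.
export function putObjectStatementFor(bucketName: string, keyPrefix: string): IamStatement {
  // Strip leading/trailing slashes so 'uploads', '/uploads' and 'uploads/' behave the same.
  const prefix = keyPrefix.replace(/^\/+|\/+$/g, '');
  const objectPattern = prefix ? `${prefix}/*` : '*';
  return {
    Effect: 'Allow',
    Action: ['s3:PutObject'],
    Resource: `arn:aws:s3:::${bucketName}/${objectPattern}`,
  };
}

console.log(putObjectStatementFor('eventsapp-photos-dev.sampleapps.winterwindsoftware.com', 'uploads/'));
```

With this scoping, a leaked presigned URL's token can at most write objects under `uploads/` in the photos bucket.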

Now let's look at the handler code:

```typescript
import S3 from 'aws-sdk/clients/s3';
import uuid from 'uuid/v4';
import { InitiateEventPhotoUploadResponse, PhotoMetadata } from '@common/schemas/photos-api';
import { isValidImageContentType, getSupportedContentTypes, getFileSuffixForContentType } from '@svc-utils/image-mime-types';
import { s3 as s3Config } from '@svc-config';
import { wrap } from '@common/middleware/apigw';
import { StatusCodeError } from '@common/utils/errors';

const s3 = new S3();

export const handler = wrap(async (event) => {
  // Read metadata from path/body and validate
  const eventId = event.pathParameters!.eventId;
  const body = JSON.parse(event.body || '{}');
  const photoMetadata: PhotoMetadata = {
    contentType: body.contentType,
    title: body.title,
    description: body.description,
  };
  if (!isValidImageContentType(photoMetadata.contentType)) {
    throw new StatusCodeError(400, `Invalid contentType for image. Valid values are: ${getSupportedContentTypes().join(',')}`);
  }
  // TODO: Add any further business logic validation here (e.g. that current user has write access to eventId)

  // Create the PutObjectRequest that will be embedded in the signed URL
  const photoId = uuid();
  const req: S3.Types.PutObjectRequest = {
    Bucket: s3Config.photosBucket,
    Key: `uploads/event_${eventId}/${photoId}.${getFileSuffixForContentType(photoMetadata.contentType)!}`,
    ContentType: photoMetadata.contentType,
    CacheControl: 'max-age=31557600', // instructs CloudFront to cache for one year
    // Set Metadata fields to be retrieved post-upload and stored in DynamoDB
    Metadata: {
      ...(photoMetadata as any),
      photoId,
      eventId,
    },
  };
  // Get the signed URL from S3 and return to client
  const s3PutObjectUrl = await s3.getSignedUrlPromise('putObject', req);
  const result: InitiateEventPhotoUploadResponse = {
    photoId,
    s3PutObjectUrl,
  };
  return {
    statusCode: 201,
    body: JSON.stringify(result),
  };
});
```

The `s3.getSignedUrlPromise` call is the main line of interest here. It serializes a PutObject request into a signed URL.
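The `@svc-utils/image-mime-types` helpers imported above are not shown in the article. A plausible minimal version is a lookup table from MIME type to file suffix; the supported types listed here and the `buildPhotoKey` helper are assumptions for illustration, mirroring the `uploads/event_<eventId>/<photoId>.<suffix>` key layout the handler uses.

```typescript
// Assumed set of accepted image types and their file suffixes.
const SUFFIX_BY_CONTENT_TYPE: Record<string, string> = {
  'image/jpeg': 'jpg',
  'image/png': 'png',
  'image/gif': 'gif',
  'image/webp': 'webp',
};

export function getSupportedContentTypes(): string[] {
  return Object.keys(SUFFIX_BY_CONTENT_TYPE);
}

// hasOwnProperty avoids treating inherited names like 'constructor' as valid types.
export function isValidImageContentType(contentType: unknown): contentType is string {
  return (
    typeof contentType === 'string' &&
    Object.prototype.hasOwnProperty.call(SUFFIX_BY_CONTENT_TYPE, contentType)
  );
}

export function getFileSuffixForContentType(contentType: string): string | undefined {
  return isValidImageContentType(contentType) ? SUFFIX_BY_CONTENT_TYPE[contentType] : undefined;
}

// Hypothetical helper: the same key layout the handler builds inline.
export function buildPhotoKey(eventId: string, photoId: string, contentType: string): string {
  const suffix = getFileSuffixForContentType(contentType);
  if (!suffix) throw new Error(`Unsupported contentType: ${contentType}`);
  return `uploads/event_${eventId}/${photoId}.${suffix}`;
}

console.log(buildPhotoKey('1234', '1d80868b-b05b-4ac7-ae52-bdb2dfb9b637', 'image/png'));
```

Validating `contentType` before signing matters because the value is baked into the signature, so the browser's later PUT must send exactly this type.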

I'm using a `wrap` middleware function in order to handle cross-cutting API concerns such as adding CORS headers and logging uncaught errors.
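The `wrap` middleware itself isn't shown in the article. A minimal sketch of what such a wrapper could look like follows; the CORS header value, the error-to-response mapping and the `StatusCodeError` shape are assumptions, and the API Gateway event and result types are trimmed to the fields used.

```typescript
// Minimal stand-ins for the API Gateway proxy event/result types.
interface ApiEvent {
  pathParameters: Record<string, string> | null;
  body: string | null;
}

interface ApiResult {
  statusCode: number;
  body: string;
  headers?: Record<string, string>;
}

// Error carrying an HTTP status, like the one thrown by the handler above (assumed shape).
export class StatusCodeError extends Error {
  readonly statusCode: number;
  constructor(statusCode: number, message: string) {
    super(message);
    this.statusCode = statusCode;
    // Keep instanceof working when compiling down to ES5.
    Object.setPrototypeOf(this, StatusCodeError.prototype);
  }
}

const CORS_HEADERS: Record<string, string> = { 'Access-Control-Allow-Origin': '*' };

export function wrap(handler: (event: ApiEvent) => Promise<ApiResult>) {
  return async (event: ApiEvent): Promise<ApiResult> => {
    let result: ApiResult;
    try {
      result = await handler(event);
    } catch (err) {
      if (err instanceof StatusCodeError) {
        // Expected errors become the status code they carry.
        result = { statusCode: err.statusCode, body: JSON.stringify({ message: err.message }) };
      } else {
        // Anything else is logged and hidden behind a generic 500.
        console.error('Uncaught error', err);
        result = { statusCode: 500, body: JSON.stringify({ message: 'Internal server error' }) };
      }
    }
    // Every response, success or failure, gets the CORS headers.
    return { ...result, headers: { ...CORS_HEADERS, ...result.headers } };
  };
}
```

Adding the CORS headers on error responses too is the important design choice: without them the browser hides the real status from your client code.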

Step 3: Uploading file from the web app

Now to implement the client logic. I've created a very basic (read: ugly) create-react-app example (code here). I used Amplify's Auth library to manage the Cognito authentication and then created a PhotoUploader React component which makes use of the React Dropzone library:

```tsx
// components/Photos/PhotoUploader.tsx
import React, { useCallback } from 'react';
import { useDropzone } from 'react-dropzone';
import { uploadPhoto } from '../../utils/photos-api-client';

const PhotoUploader: React.FC<{ eventId: string }> = ({ eventId }) => {
  const onDrop = useCallback(async (files: File[]) => {
    console.log('starting upload', { files });
    const file = files[0];
    try {
      const uploadResult = await uploadPhoto(eventId, file, {
        // should enhance this to read title and description from text input fields.
        title: 'my title',
        description: 'my description',
        contentType: file.type,
      });
      console.log('upload complete!', uploadResult);
      return uploadResult;
    } catch (error) {
      console.error('Error uploading', error);
      throw error;
    }
  }, [eventId]);
  const { getRootProps, getInputProps, isDragActive } = useDropzone({ onDrop });

  return (
    <div {...getRootProps()}>
      <input {...getInputProps()} />
      {isDragActive ? (
        <p>Drop the files here ...</p>
      ) : (
        <p>Drag and drop some files here, or click to select files</p>
      )}
    </div>
  );
};

export default PhotoUploader;
```

```typescript
// utils/photos-api-client.ts
import { API, Auth } from 'aws-amplify';
import axios, { AxiosResponse } from 'axios';
import config from '../config';
import { PhotoMetadata, InitiateEventPhotoUploadResponse, EventPhoto } from '../../../../services/common/schemas/photos-api';

API.configure(config.amplify.API);

const API_NAME = 'PhotosAPI';

async function getHeaders(): Promise<any> {
  // Set auth token headers to be passed in all API requests
  const headers: any = {};
  const session = await Auth.currentSession();
  if (session) {
    headers.Authorization = `${session.getIdToken().getJwtToken()}`;
  }
  return headers;
}

export async function getPhotos(eventId: string): Promise<EventPhoto[]> {
  return API.get(API_NAME, `/events/${eventId}/photos`, { headers: await getHeaders() });
}

export async function uploadPhoto(
  eventId: string,
  photoFile: any,
  metadata: PhotoMetadata,
): Promise<AxiosResponse> {
  const initiateResult: InitiateEventPhotoUploadResponse = await API.post(
    API_NAME,
    `/events/${eventId}/photos/initiate-upload`,
    { body: metadata, headers: await getHeaders() },
  );
  return axios.put(initiateResult.s3PutObjectUrl, photoFile, {
    headers: {
      'Content-Type': metadata.contentType,
    },
  });
}
```

The `uploadPhoto` function in the photos-api-client.ts file is the key here. It performs the 2-step process we mentioned before by first calling our initiate-upload API Gateway endpoint and then making a PUT request to the `s3PutObjectUrl` it returned. Make sure that you set the `Content-Type` header in your S3 PUT request, otherwise it will be rejected as not matching the signature.
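If you'd rather not pull in axios, the same PUT can be made with the standard `fetch` API. In this sketch, `putToPresignedUrl` is a hypothetical name and the `fetchImpl` parameter exists only so the function can be exercised without network access; the detail that matters is that `Content-Type` must equal the `contentType` that was signed into the URL.

```typescript
// The subset of fetch used here, so a fake can be injected in tests.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: Blob | ArrayBuffer | string },
) => Promise<{ ok: boolean; status: number }>;

export async function putToPresignedUrl(
  presignedUrl: string,
  file: Blob | ArrayBuffer | string,
  contentType: string,
  fetchImpl: FetchLike = (url, init) => fetch(url, init),
): Promise<void> {
  // Content-Type was part of the signed request, so it must match exactly.
  const response = await fetchImpl(presignedUrl, {
    method: 'PUT',
    headers: { 'Content-Type': contentType },
    body: file,
  });
  if (!response.ok) {
    // S3 answers a signature mismatch with 403 SignatureDoesNotMatch.
    throw new Error(`S3 upload failed with status ${response.status}`);
  }
}
```

In the browser, a `File` from React Dropzone is a `Blob`, so it can be passed straight in as `file`.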

Step 4: Pushing photo data into database

Now that the photo has been uploaded, the web app will need a way of listing all photos uploaded for an event (using the `getPhotos` function above).

To close this loop and make this query possible, we need to record the photo information in our database. We do this by creating a second Lambda function processUploadedPhoto that is triggered whenever a new object is added to our S3 bucket.

Let's look at its config:

```yaml
# serverless.yml
service: eventsapp-photos-api
…
functions:
  …
  s3ProcessUploadedPhoto:
    handler: src/s3/process-uploaded-photo.handler
    iamRoleStatements:
      - Effect: Allow
        Action:
          - dynamodb:Query
          - dynamodb:Scan
          - dynamodb:GetItem
          - dynamodb:PutItem
          - dynamodb:UpdateItem
        Resource: arn:aws:dynamodb:${self:provider.region}:${self:custom.awsAccountId}:table/${cf:${self:custom.infraStack}.DynamoDBTablePrefix}*
      - Effect: Allow
        Action:
          - s3:GetObject
          - s3:HeadObject
        Resource: arn:aws:s3:::${cf:${self:custom.infraStack}.PhotosBucket}*
    events:
      - s3:
          bucket: ${cf:${self:custom.infraStack}.PhotosBucket}
          event: s3:ObjectCreated:*
          rules:
            - prefix: uploads/
          existing: true
```

It's triggered off the `s3:ObjectCreated` event and will only fire for files added below the `uploads/` top-level folder. In the `iamRoleStatements` section, we are allowing the function to write to our DynamoDB table and read from the S3 bucket.
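One detail worth knowing when consuming these events: object keys in S3 event notifications are URL-encoded (a space arrives as `+`, for example), and `Records` is an array. The handler that follows reads only `Records[0]` and passes the raw key straight through, which works for the UUID-based keys generated in this app. A more defensive sketch that decodes every record's key might look like this; the trimmed `S3EventLike` type and the `uploadedObjects` name are assumptions standing in for `S3Event` from `aws-lambda`.

```typescript
// Trimmed-down S3 notification shape (assumption: only the fields used here).
interface S3EventLike {
  Records: {
    eventName: string;
    s3: { bucket: { name: string }; object: { key: string } };
  }[];
}

// Keys in S3 notifications are URL-encoded, with spaces encoded as '+'.
export function decodeS3Key(rawKey: string): string {
  return decodeURIComponent(rawKey.replace(/\+/g, ' '));
}

// Every newly created object under the prefix, with its key decoded.
export function uploadedObjects(event: S3EventLike, prefix = 'uploads/') {
  return event.Records
    .filter((r) => r.eventName.startsWith('ObjectCreated:'))
    .map((r) => ({ bucket: r.s3.bucket.name, key: decodeS3Key(r.s3.object.key) }))
    // Defensive re-check of the trigger's prefix rule.
    .filter((o) => o.key.startsWith(prefix));
}
```

Each returned `{ bucket, key }` pair can then be passed to `headObject` exactly as the handler does for its single record.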

Now let's look at the function code:

```typescript
import { S3Event } from 'aws-lambda';
import S3 from 'aws-sdk/clients/s3';
import log from '@common/utils/log';
import { EventPhotoCreate } from '@common/schemas/photos-api';
import { cloudfront } from '@svc-config';
import { savePhoto } from '@svc-models/event-photos';

const s3 = new S3();

export const handler = async (event: S3Event): Promise<void> => {
  const s3Record = event.Records[0].s3;

  // First fetch metadata from S3
  const s3Object = await s3.headObject({ Bucket: s3Record.bucket.name, Key: s3Record.object.key }).promise();
  if (!s3Object.Metadata) {
    // Shouldn't get here
    const errorMessage = 'Cannot process photo as no metadata is set for it';
    log.error(errorMessage, { s3Object, event });
    throw new Error(errorMessage);
  }
  // S3 metadata field names are converted to lowercase, so need to map them out carefully
  const photoDetails: EventPhotoCreate = {
    eventId: s3Object.Metadata.eventid,
    description: s3Object.Metadata.description,
    title: s3Object.Metadata.title,
    id: s3Object.Metadata.photoid,
    contentType: s3Object.Metadata.contenttype,
    // Map the S3 bucket key to a CloudFront URL to be stored in the DB
    url: `https://${cloudfront.photosDistributionDomainName}/${s3Record.object.key}`,
  };
  // Now write to DDB
  await savePhoto(photoDetails);
};
```

The event object passed to the Lambda handler function only contains the bucket name and key of the object that triggered it. So in order to fetch the metadata, we need to use the `headObject` S3 API call. Once we've extracted the required metadata fields, we then construct a CloudFront URL for the photo (using the CloudFront distribution's domain name passed in via an environment variable) and save to DynamoDB.
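The mapping step is easy to pull out into a pure function, which makes the lowercase-key behaviour explicit and lets you test it without S3. This sketch assumes `EventPhotoCreate` has exactly the fields the handler sets (its real definition isn't shown), and `toEventPhoto` is a hypothetical name; unlike the handler, it fails fast if a required field is missing.

```typescript
// Assumed shape of EventPhotoCreate, based on the fields the handler sets.
interface EventPhotoCreate {
  id: string;
  eventId: string;
  contentType: string;
  title?: string;
  description?: string;
  url: string;
}

// User metadata keys come back from S3 in lowercase (photoId -> photoid).
export function toEventPhoto(
  metadata: Record<string, string>,
  objectKey: string,
  distributionDomain: string,
): EventPhotoCreate {
  const required = ['photoid', 'eventid', 'contenttype'];
  const missing = required.filter((k) => !metadata[k]);
  if (missing.length > 0) {
    throw new Error(`Missing metadata: ${missing.join(', ')}`);
  }
  return {
    id: metadata.photoid,
    eventId: metadata.eventid,
    contentType: metadata.contenttype,
    title: metadata.title,
    description: metadata.description,
    // Same CloudFront URL construction as the handler.
    url: `https://${distributionDomain}/${objectKey}`,
  };
}
```

The handler body would then reduce to `headObject`, `toEventPhoto(s3Object.Metadata, key, domain)` and `savePhoto`.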

Future enhancements

A potential enhancement to the upload flow is to add an image optimization step before saving the photo to the database. This would involve having a Lambda function listen for `s3:ObjectCreated` events below the `uploads/` key prefix, which then reads the image file, resizes and optimizes it accordingly, and saves the new copy to the same bucket but under a new `optimized/` key prefix. The config of our Lambda function that saves to the database should then be updated to be triggered off this new prefix instead.
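The key rewrite at the heart of that enhancement is small. In the sketch below (hypothetical `optimizedKeyFor`), the optimized copy keeps the `event_<eventId>/<photoId>.<suffix>` part of the key and only swaps the prefix. Keeping the two prefixes separate also matters because a function that wrote its output back under `uploads/` would re-trigger itself. The resizing itself, and copying the metadata onto the new object so the database function can still read it, are left out.

```typescript
const UPLOAD_PREFIX = 'uploads/';
const OPTIMIZED_PREFIX = 'optimized/';

// Hypothetical helper: where the optimized copy of an uploaded photo is written.
export function optimizedKeyFor(uploadKey: string): string {
  if (!uploadKey.startsWith(UPLOAD_PREFIX)) {
    // Refusing other prefixes guards against processing the optimizer's own output.
    throw new Error(`Not an upload key: ${uploadKey}`);
  }
  return OPTIMIZED_PREFIX + uploadKey.slice(UPLOAD_PREFIX.length);
}

console.log(optimizedKeyFor('uploads/event_1234/1d80868b-b05b-4ac7-ae52-bdb2dfb9b637.png'));
```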


Source: https://serverlessfirst.com/serverless-photo-upload-api/
